-

-

Notifications

You must be signed in to change notification settings - Fork 2.7k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Unable to specify a default value for a generic parameter #3737

Comments

|

I think the problem here is that in general, e.g. if there are other parameters also using To make this work, you can use @overload

def foo() -> int: ...

@overload

def foo(a: _T) -> _T: ...

def foo(a = 42): # Implementation, assuming this isn't a stub

return aThat's what we do in a few places in typeshed too (without the implementation though), e.g. check out |

|

I'm running in to a similar issue when trying to iron out a bug in the configparser stubs. Reducing RawConfigParser to the relevant parts gives: _section = Mapping[str, str]

class RawConfigParser:

def __init__(self, dict_type: Mapping[str, str] = ...) -> None: ...

def defaults(self) -> _section: ...This is incorrect at the moment, because _T1 = TypeVar('_T1', bound=Mapping)

class RawConfigParser(Generic[_T1]):

def __init__(self, dict_type: Type[_T1] = ...) -> None: ...

def defaults(self) -> _T1: ...but when you don't pass a dict_type, mypy can't infer what type it should be using and asks for a type annotation where it is being used. This is less than ideal for the default instantiation of the class (which will be what the overwhelming majority of people are using). So I tried adding the appropriate default to the definition: _T1 = TypeVar('_T1', bound=Mapping)

class RawConfigParser(_parser, Generic[_T1]):

def __init__(self, dict_type: Type[_T1] = OrderedDict) -> None: ...

def defaults(self) -> _T1: ...but then I hit the error that OP was seeing: This feels like something that isn't currently supported, but I'm not sure if I'm missing a way that I could do this... |

|

@OddBloke |

|

@ilevkivskyi I don't follow, I'm afraid; could you expand on that a little, please? |

|

Consider this code: class UserMapping(Mapping):

...

RawConfigParser[UserMapping]()In this case |

|

@ilevkivskyi Thanks, that makes sense. Do you think what I'm trying to do is unrepresentable as things stand? |

|

I didn't think enough about this, but my general rule is not to be "obsessed" with precise types, sometimes |

|

Allowing default values for generic parameters might also enable using type constraints to ensure that generics are only used in specified ways. This is a bit esoteric, but here is an example of how you might use the same implementation for a dict and a set while requiring that consumers of the dict type always provide a value to class Never(Enum):

"""An uninhabited type"""

class Only(Enum):

"""A type with one member (kind of like NoneType)"""

ONE = 1

NOT_PASSED = Only(1)

class MyGenericMap(Generic[K, V]):

def insert(key, value=cast(Never, NOT_PASSED)):

# type: (K, V) -> None

...

if value is NOT_PASSED:

....

class MySet(MyGenericMap[K, Never]):

pass

class MyDict(MyGenericMap[K, V]):

pass

my_set = MySet[str]()

my_set.insert('hi') # this is fine, default value matches concrete type

my_set.insert('hi', 'hello') # type error, because 'hello' is not type never

my_dict = MyDict[str, int]()

my_dict.insert('hi') # type error because value is type str, not Never

my_dict.insert('hi', 22) # this is fine, because the default value is not used |

|

Huh, this just came up in our code. |

|

This request already appeared five times, so I am raising priority to high. I think my preferred solution for this would be to support lower bounds for type variables. It is not too hard to implement (but still a large addition), and at the same time it may provide more expressiveness in other situations. |

|

A second part for the workaround with overload should be noted: The header of implementation of the overloaded function must be without a generic annotation for that variable, but it is not acceptable for more complex functions where the body should be checked. Only the binding between input and output types is checked by overloading. The most precise solution for the body seems to encapsulate it by a new function with the same types, but without a default. @overload

def foo() -> int: ...

@overload

def foo(a: _T) -> _T: ...

def foo(a = 42): # unchecked implementation

return foo_internal(a)

def foo_internal(a: _T) -> _T:

# a checked complicated body moved here

return aAlmost everything could be checked even in more complicated cases, but the number of necessary overloaded declarations could rise exponentially by EDIT |

|

Another solution is to use a broad static type for that parameter and immediately assign it in the body to the original exact generic type. It is a preferable solution for a class overload. _T = TypeVar('_T', list, dict)

class Cursor(Generic[_T]):

@overload

def __init__(self, connection: Connection) -> None: ...

@overload

def __init__(self, connection: Connection, row_type: Type[_T]) -> None: ...

def __init__(self, connection: Connection, row_type: Type=list) -> None:

self.row_type: Type[_T] = row_type # this annotation is important

...

def fetchone(self) -> Optional[_T]: ...

def fetchall(self) -> List[_T]: ... # more methods depend on _T type

cursor = Cursor(connection, dict) # cursor.execute(...)

reveal_type(cursor.fetchone()) # dict |

Wait. Why is that a problem? If default value doesn’t work for some calls, that should be a type error at the call site. _T = TypeVar('_T')

def foo(a: List[_T] = [42], b: List[_T] = [42]) -> List[_T]:

return a + b

foo() # This should work.

foo(a=[17]) # This should work.

foo(b=[17]) # This should work.

foo(a=["foo"]) # This should be a type error (_T cannot be both str and int).

foo(b=["foo"]) # This should be a type error (_T cannot be both int and str).

foo(a=["foo"], b=["foo"]) # This should work.

Only if we retain the requirement that the default value always works, which is not what’s being requested here. The desired result is: _T1 = TypeVar('_T1', bound=Mapping)

class RawConfigParser(_parser, Generic[_T1]):

def __init__(self, dict_type: Type[_T1] = OrderedDict) -> None: ...

def defaults(self) -> _T1: ...

class UserMapping(Mapping):

...

RawConfigParser[OrderedDict]() # This should work.

RawConfigParser[UserMapping]() # This should be a type error (_T1 cannot be both UserMapping and OrderedDict).

RawConfigParser[UserMapping](UserMapping) # This should work. |

|

I ran into this trying to write _T = TypeVar('_T')

def str2int(s:str, default:_T = None) -> Union[int, _T]: # error: Incompatible default for argument "default" (default has type "None", argument has type "_T")

try:

return int(s)

except ValueError:

return default(after figuring out that I need @overload

def str2int(s:str) -> Optional[int]: ...

@overload

def str2int(s:str, default:_T) -> Union[int, _T]: ...

def str2int(s, default = None):

the_body_is_no_longer_type_checkedI think this raises the bar for type-checking newbies. |

|

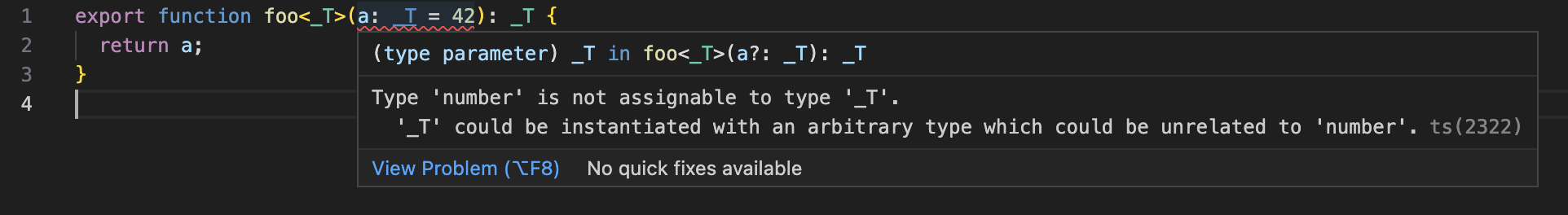

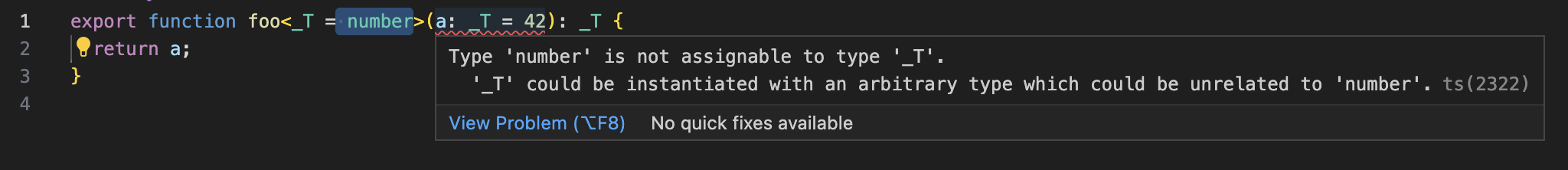

To @andersk's point, I also don't see how PEP 696 makes any difference here. The For what its worth, Typescript also does not support this pattern: And adding a type variable default in Typescript does not "fix" the issue: That's not to say that Python typing is for any reason bound by what Typescript can do, just that this limitation is not a peculiarity of Python or mypy. It seems to me that a final decision should be made here and this should be documented so developers don't need to find this thread to sort it out. |

Sorry, I mistakenly brought up PEP 696. I hadn't looked at this issue carefully since I subscribed to it, and forgot what it was actually about. |

|

I'm currently doing some experimentation in the hope of eventually addressing this. |

|

I haven't made much progress with an implementation yet, but here are some notes based on what I've learned so far (much of this is also covered above in the discussion, but I want so summarize everything in one comment):

|

|

Yeah, this is essentially a plan I had in mind a while ago. But after all it seemed to me this would cause a visible performance impact even with all the possible optimizations. OTOH it may be fine with all the recent performance improvements. Btw few more points to keep in mind:

|

Unfortunately a generic type annotation with a default triggers an existing mypy limitation (python/mypy#3737). The current workaround is to use overloads and then not annotate the implementation containing the default parameter; this still enables mypy to deduce correct return types. Two overloads are added for Series.to_dict, even though they could be combined using a Union type, as at least two overloads are required for a single method.

* Correct type annotation for to_dict. The `into` argument of DataFrame.to_dict and Series.to_dict can be either a class or instance of a class of dict; this is covariant - subclasses of dict can also be used. The argument was annotated as `type[dict]` though, so type checkers marked passing initialized objects (required for collections.defaultdict) as an incorrect argument type. Fix by annotating `into` to take either a subclass of dict or an initialized instance of a subclass of dict. * Use generic MutableMapping type for to_dict method. Unfortunately a generic type annotation with a default triggers an existing mypy limitation (python/mypy#3737). The current workaround is to use overloads and then not annotate the implementation containing the default parameter; this still enables mypy to deduce correct return types. Two overloads are added for Series.to_dict, even though they could be combined using a Union type, as at least two overloads are required for a single method. * Fix formatting * return annotation for non-overload * no keyword should return dict * swap overload order to work for dict subclasses that are passed as keywords * fix tests --------- Co-authored-by: Torsten Wörtwein <twoertwein@gmail.com>

May I ask if there's a little more detail on this design decision? Why are funciton implementations not checked for overloads? Searches on the topic are giving me blanks. I'm curious why mypy would not check a defanition for each overload variant. It would only need to revist one function per overload so I can't see the cost would be that high, nor the implementation that complex. I wonder what I'm missing? |

Simplified Example:

Real World example is something closer to:

The text was updated successfully, but these errors were encountered: