
Test retries, when retrying on several failed tests, causes hanging in cypress run

#9040

Comments

|

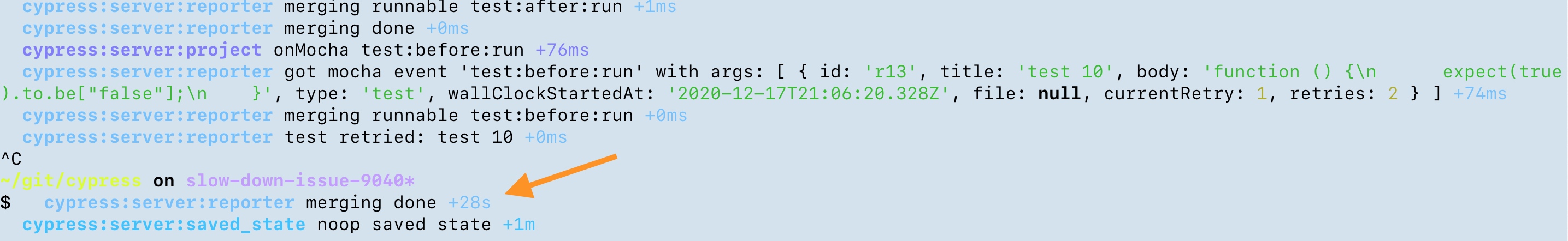

I don't have an example to reproduce but this happened to us as well. Cypress hung indefinitely in a test spec after retrying a test case. We turned off retries, and the test spec runs to completion. I ran with After ~20 or so of these tables being spit out, the last one before I quit the run was: |

|

Likely this issue is very specific to the structure of the test code, perhaps the combination of hooks and suites, or the test code itself. We don't have anything to investigate until a reproducible example is provided. Here are some tips for providing a Short, Self Contained, Correct, Example and our own Troubleshooting Cypress guide. |

|

We're seeing the same problem in Cypress 5.5 using Chrome 83 on Ubuntu 20.04--I had three different Cypress projects all start hanging shortly after upgrading to 5.5 and enabling retries. Removing the retries configuration immediately resolved the issue. Some observations:

And I think I just got a reproducible example:

spec file:

    describe('page', () => {
      for (let i = 0; i < 200; i++) {
        it('fails', () => {
          expect(true).to.be.false;
        });
      }
    });

The above works fine using |

|

@jennifer-shehane this was supposed to be the marquee feature of Cypress 5. I have a trivial example above that reproduces the hang, but it's now been 10 days with no response and Cypress 6 coming out in that time. I love Cypress, but the pattern of lack of responsiveness to, let alone fixing of existing issues in the apparent rush to deliver new features is getting to be a real problem for us.

To be frank, as a professional tester I'm disappointed that literally the first thing I tried to reproduce the hang worked. I'm even more disappointed that the Cypress team didn't even try something simple like this and instead made the assumption that it was an obscure edge case. To me, that suggests that new Cypress features aren't really being subjected to more than shallow confirmatory testing, which for a testing tool is scary. Sorry for the rant. Again, I've been using Cypress for a couple years now and it's overall been a positive experience. I just haven't known how best to voice my frustration with the seeming lack of support and not knowing which bug reports will just disappear into a black hole. Please take this as constructive feedback from a tester who would love to see Cypress become even better. |

I'm facing the exact same issue. Could it be CPU or memory constraints? My logs, right before it hangs and prints tons of CPU and memory usage:

|

We have lots of tests on retries, but likely not retrying upwards of 10 times. Perhaps that is the case causing the hanging. Trying every iteration of test suites is something that is not possible and why we have bug reports for users. Thanks for providing this example and I understand the frustration. We're doing what we can.

I can reproduce the hanging with the example. We haven't previously seen this behavior with regards to retries. Each retry slows down the run. You can even see this if you add retries: 4 with the example below. Each test gets slower and slower until it hangs. This has been present since 5.0.

    describe('page', () => {
      for (let i = 0; i < 10; i++) {
        it(`test ${i}`, () => {
          expect(true).to.be.false
        })
      }
    })

DEBUG logs with video turned off: run.log

This is a weird log at the end when video was turned on, but doesn't explain the locking up when video is turned off:

I tried to reduce the problem down. A few things that did not work in preventing the hanging.

|

cypress run

|

Recreated the tests slowing down and hanging for Jennifer's example in https://github.com/cypress-io/cypress-test-tiny/tree/retries-slowdown-9040 Interesting that the test is really slow in the phase "No commands were issued in this test." When running with |

|

Note: repeating the same test via retries does not seem to show unusual slowdown

    describe('page', () => {
      for (let i = 0; i < 1; i++) {
        it(`test ${i}`, { retries: 140 }, () => {
          expect(true).to.be.false
        })
      }
    })

Keeps running pretty snappy. On the other hand, 2 retries per test across many tests slows down a lot, even after 10 tests

    describe('page', () => {
      for (let i = 0; i < 100; i++) {
        it(`test ${i}`, { retries: 2 }, () => {
          expect(true).to.be.false
        })
      }
    })

|

Went to the suspicious code in lib/reporter.js const mergeRunnable = (eventName) => {

return (function (testProps, runnables) {

debug('merging runnable %s', eventName)

toMochaProps(testProps)

const runnable = runnables[testProps.id]

if (eventName === 'test:before:run') {

if (testProps._currentRetry > runnable._currentRetry) {

debug('test retried:', testProps.title)

const prevAttempts = runnable.prevAttempts || []

delete runnable.prevAttempts

const prevAttempt = _.cloneDeep(runnable)

delete runnable.failedFromHookId

delete runnable.err

delete runnable.hookName

testProps.prevAttempts = prevAttempts.concat([prevAttempt])

}

}

const merged = _.extend(runnable, testProps)

debug('merging done')

return merged

})

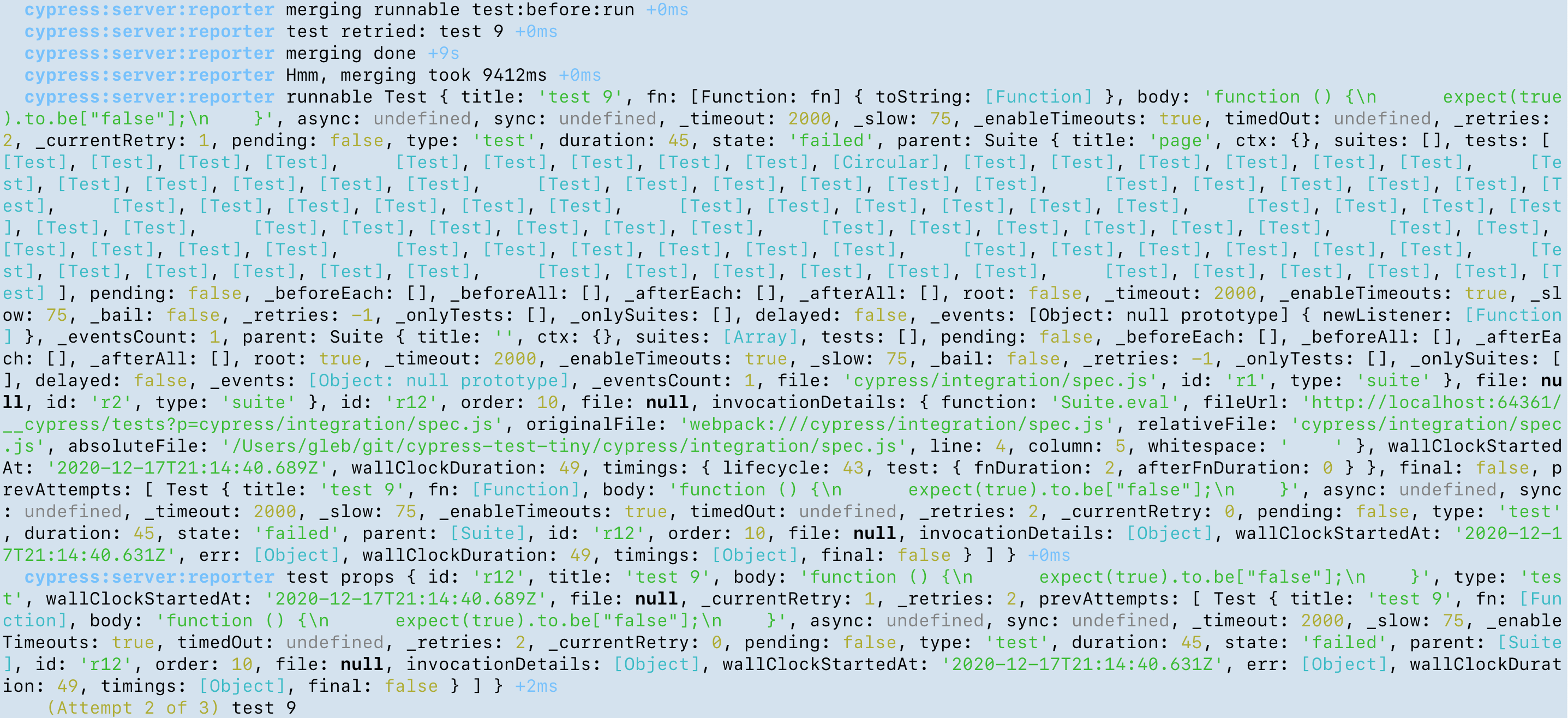

}Added debug logs to see how long it takes to merge events - seems the pauses in the test runner align with the messages about merging |
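For anyone who wants to take a similar measurement locally, here is a minimal sketch of a timing wrapper. It is not the instrumentation used in the thread; `withTiming` and its `log` parameter are hypothetical names, and it relies only on Node's built-in `process.hrtime.bigint()`.

```javascript
// Hypothetical helper, not part of Cypress: wraps a function and reports
// how long each call took, in milliseconds.
function withTiming(label, fn, log = console.log) {
  return function timed(...args) {
    const start = process.hrtime.bigint()
    try {
      return fn.apply(this, args)
    } finally {
      // hrtime.bigint() returns nanoseconds; convert to milliseconds
      const ms = Number(process.hrtime.bigint() - start) / 1e6
      log(`${label} took ${ms.toFixed(2)}ms`)
    }
  }
}

// Usage: wrap any merge-like function and call it as usual.
const timedAssign = withTiming('merge', (target, source) => Object.assign(target, source))
timedAssign({ id: 'r1' }, { title: 'test 0' })
```

If the logged durations climb from one test to the next, the wrapped function is a reasonable suspect for the slowdown.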

|

Added timing to the merge code in #14222 and saw the following:

The test keeps all those objects around and the merge is slow. Maybe we should do our own lightweight extend here since we know the structure
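A rough sketch of what a "lightweight" approach could look like, assuming the interesting fields of a runnable are known up front. This is not the change made in the eventual fix; `ATTEMPT_FIELDS`, `snapshotAttempt` and `extendKnown` are hypothetical names, and the field list only contains names that appear in the snippet quoted in this thread.

```javascript
// Hypothetical field list, taken from names visible in the quoted snippet.
const ATTEMPT_FIELDS = ['id', 'title', 'err', 'hookName', 'failedFromHookId', '_currentRetry']

// Shallow snapshot of a previous attempt: copies only the known fields,
// so references such as a parent suite or older attempts are not followed.
function snapshotAttempt(runnable) {
  const snapshot = {}
  for (const field of ATTEMPT_FIELDS) {
    if (field in runnable) snapshot[field] = runnable[field]
  }
  return snapshot
}

// Minimal extend: copies own enumerable keys from source onto target.
function extendKnown(target, source) {
  for (const key of Object.keys(source)) {
    target[key] = source[key]
  }
  return target
}
```

The trade-off is that a fixed field list has to be kept in sync with whatever the reporter actually reads from previous attempts.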

|

The slowdown can be attributed to this line cypress/packages/server/lib/reporter.js Line 146 in 7d4e38e

The runnable is becoming larger and larger |
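One possible explanation for why a single test with 140 retries stays fast while many tests with 2 retries each slow down: if every runnable keeps a reference to its parent suite (an assumption, the thread does not confirm it), then deep-cloning a runnable on retry also copies every sibling test, including the attempts already stored on them. The sketch below is a toy model, not Cypress code. `deepClone`, `countNodes` and `simulate` are hypothetical helpers, and `deepClone` is a plain stand-in for `_.cloneDeep`.

```javascript
// Cycle-aware deep clone; a simplified stand-in for _.cloneDeep.
function deepClone(value, seen = new Map()) {
  if (value === null || typeof value !== 'object') return value
  if (seen.has(value)) return seen.get(value)
  const copy = Array.isArray(value) ? [] : {}
  seen.set(value, copy)
  for (const key of Object.keys(value)) copy[key] = deepClone(value[key], seen)
  return copy
}

// Counts distinct objects and arrays reachable from a value.
function countNodes(value, seen = new Set()) {
  if (value === null || typeof value !== 'object' || seen.has(value)) return 0
  seen.add(value)
  let total = 1
  for (const key of Object.keys(value)) total += countNodes(value[key], seen)
  return total
}

// Toy model: every test fails and is retried `retriesPerTest` times.
// Returns the size of the whole suite graph after each test finishes.
function simulate(testCount, retriesPerTest) {
  const suite = { title: 'page', tests: [] }
  for (let i = 0; i < testCount; i++) {
    // ASSUMPTION: each test points back at its parent suite
    suite.tests.push({ id: `r${i}`, title: `test ${i}`, parent: suite })
  }
  const sizes = []
  for (const test of suite.tests) {
    for (let retry = 0; retry < retriesPerTest; retry++) {
      // Mirrors the retry branch of the mergeRunnable snippet quoted above
      const prevAttempts = test.prevAttempts || []
      delete test.prevAttempts
      const prevAttempt = deepClone(test)
      test.prevAttempts = prevAttempts.concat([prevAttempt])
    }
    sizes.push(countNodes(suite))
  }
  return sizes
}

console.log(simulate(1, 140)) // one test, many retries
console.log(simulate(6, 2))   // several tests, two retries each
```

Under this model a single test's clones stay the same size, because its own `prevAttempts` is deleted before cloning. Each later test's clone, however, includes everything stored on the earlier tests, so the graph grows by roughly a factor of (retries + 1) per test. That would be consistent with the observations in this thread, but it remains a model rather than a confirmed diagnosis.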

|

The code for this is done in cypress-io/cypress#14381, but has yet to be released. |

|

Released in This comment thread has been locked. If you are still experiencing this issue after upgrading to |

Hi,

I am running Cypress tests using Docker inside a Jenkins pipeline. Unfortunately, when a test fails, Cypress hangs on the test retry attempt. I suppose the test fails in both attempts, but I have no idea why Cypress doesn't continue after that. Could anyone help me with this, or explain whether it is a bug or wrong usage? I searched for an existing issue covering this case but didn't find one.

Screen from the pipeline:

I've experimented with different ways of adding test retries to my tests:

//and in global cypress.json

    { "retries": { "runMode": 1, "openMode": 3 } }

docker Image:

    docker run --rm --ipc=host -m 4GB -e CYPRESS_VIDEO=false \
      --entrypoint=npm ".../cypress-test:5.5.0-1" \
      run testOnJenkins -- --env configFile="cypress-$ENVIRONMENT_ID"
