-

Notifications

You must be signed in to change notification settings - Fork 1.9k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Messaging] Reduce allocation for Send by using RedableBuffer #34591

Conversation

|

API change check API changes are not detected in this pull request. |

sdk/core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/ReactorSender.java

Outdated

Show resolved

Hide resolved

sdk/core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/ReactorSender.java

Outdated

Show resolved

Hide resolved

...core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/RetriableWorkItem.java

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks!

-

CHANGELOG entry

-

Does this work on our integration tests for both languages?

-

Since this affects both SB and EH... Do we happen to have some data on how much this saves when moving one from the other? I think it would be great to have so we feel confident that this improves things. :)

sdk/core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/ReactorSender.java

Outdated

Show resolved

Hide resolved

|

/azp run java - servicebus - tests |

|

Azure Pipelines successfully started running 1 pipeline(s). |

|

/azp run java - eventhubs - tests |

|

Azure Pipelines successfully started running 1 pipeline(s). |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Measuring batch send allocation

I ran a couple of memory measurements, and the new changes reduced the memory and total GC count; this gives good confidence in the new approach—the more the entity's configured maximum message size, the more visible the savings.

For memory measurements, we

- Set -Xmx3072m. 3 to 5GB is a typical container allocation.

- The application sends a batch with a total size of 1KB, containing four messages. The app uses 20 threads to invoke send API concurrently after warmup.

- Used JFR to capture 10 minutes of profiling, which is good enough to see the allocation pattern.

- Measured for max message size of 25mb and 1mb.

See the details below -

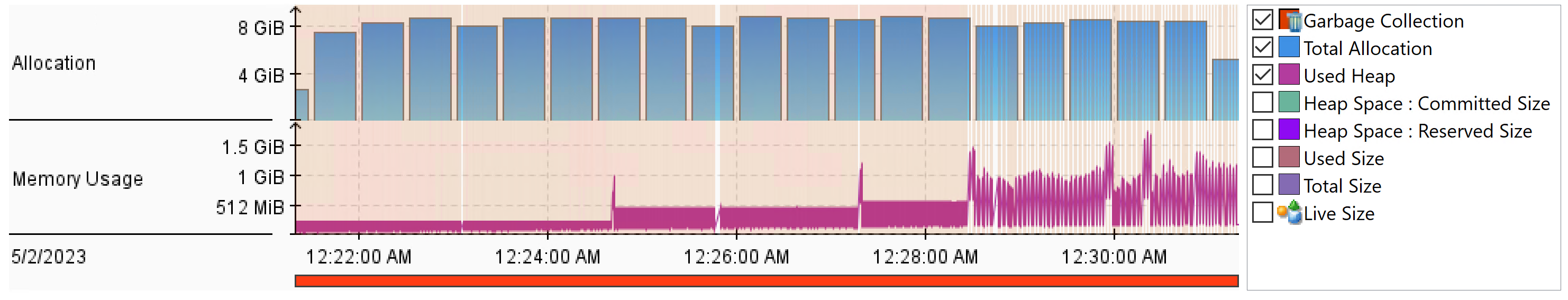

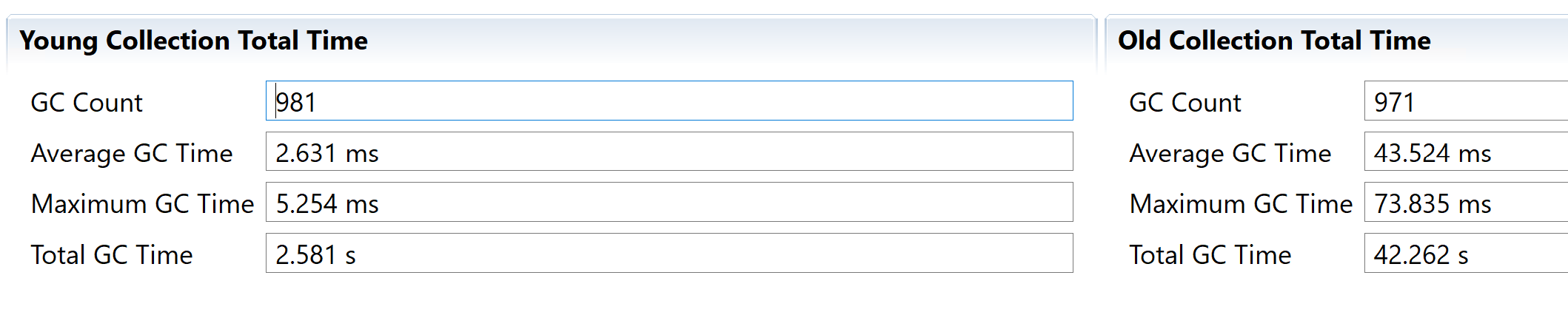

Max-message-size:25mb

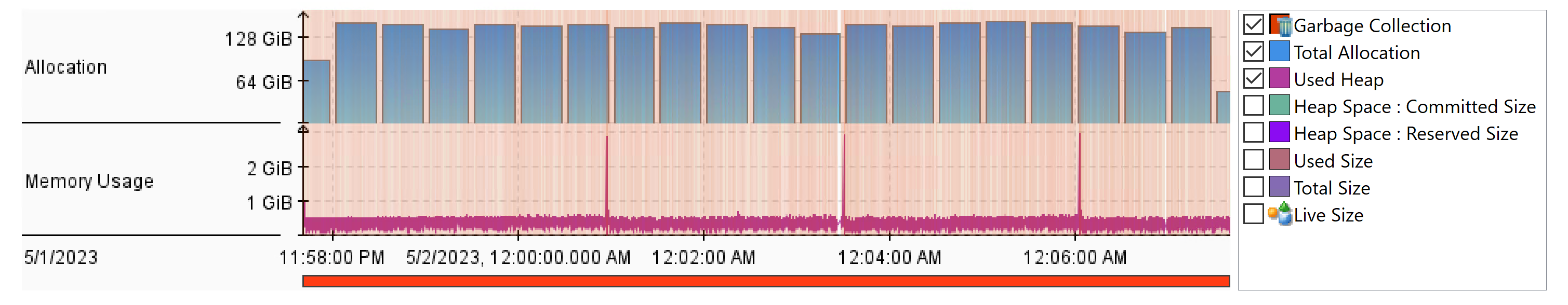

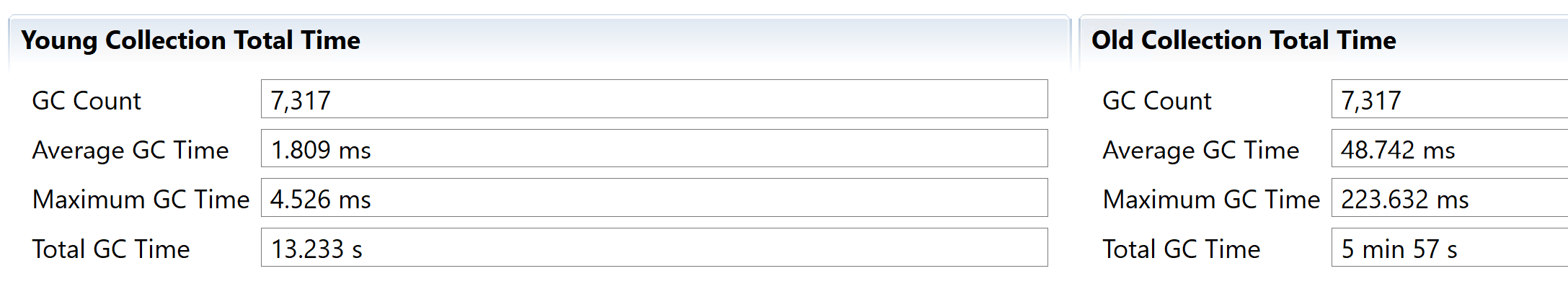

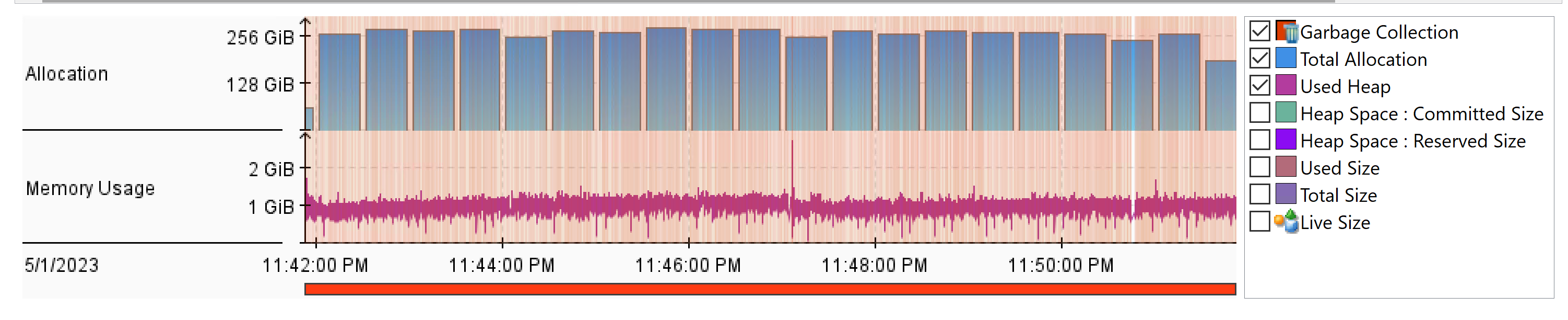

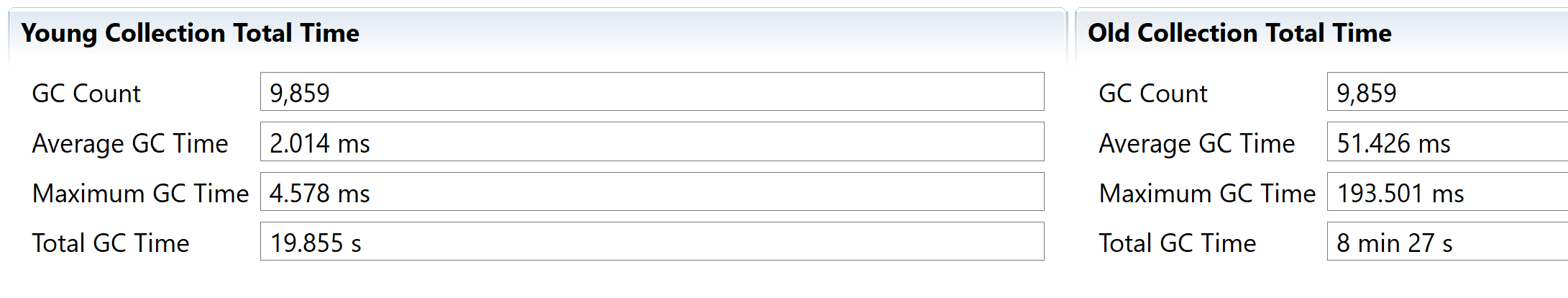

With the new approach, the memory consumption for batch send, on average, stayed below ~650 MB. With the old, it's around 1.3 GB. Also, the GC count is relatively less in the new approach.

| Metrics | New | Old |

|---|---|---|

| Average memory | ~650MB | ~1.3GB |

| Total Memory | ~150GB | ~256GB |

| GC Count | 7317 | 9859 |

New approach

Old Approach

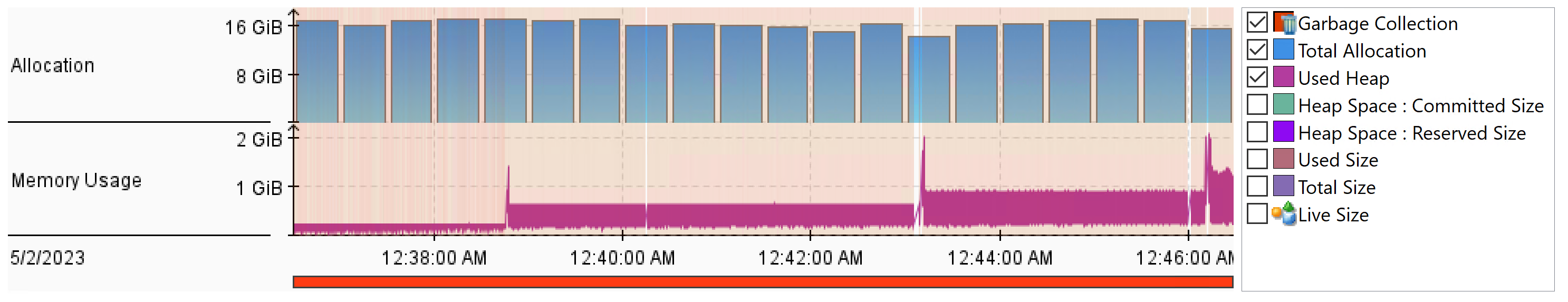

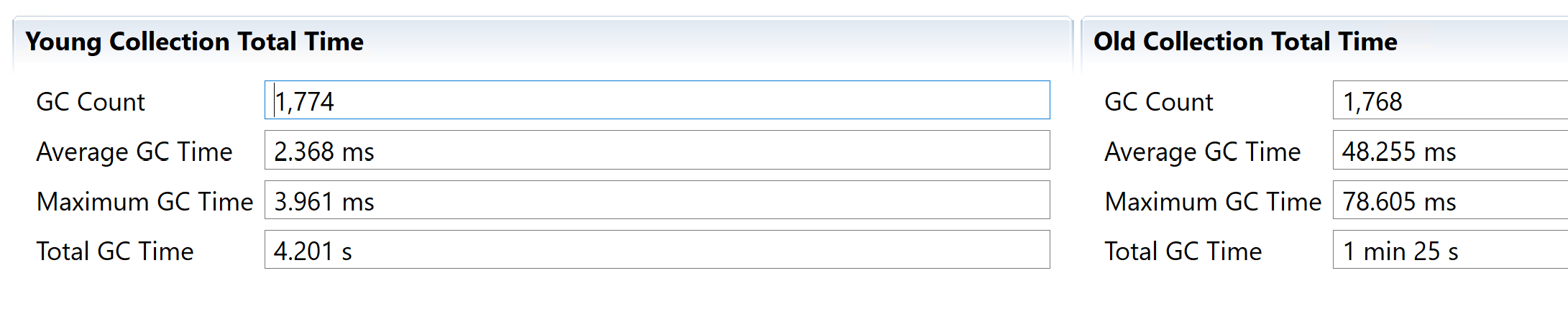

Max-message-size:1mb

1MB is the minimum configurable size for the Service Bus entity. For Event Hubs, 1 MB is the default, and Event Hubs does not allow users to override this default max message size.

With the new approach, the memory consumption for batch send, at its peak, is ~1.7 GB. With the old, it's around 2.1 GB. Also, the GC count is relatively less in the new approach.

| Metrics | New | Old |

|---|---|---|

| Peek Memory | ~1.7GB | ~2.1GB |

| Total Memory | ~8GB | ~16GB |

| GC Count | 981 | 1774 |

New approach

Old Approach

Overall, the new approach is showing good results for batch send.

The code for memory measurement.

Measuring non-batch send allocation

The non-batch send (i.e., single byte[]) code path stays the same as the old one, so it is not impacted. See this discussion.

|

/azp run java - servicebus - tests |

|

Azure Pipelines successfully started running 1 pipeline(s). |

|

/azp run java - eventhubs - tests |

|

Azure Pipelines successfully started running 1 pipeline(s). |

sdk/core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/ReactorSender.java

Show resolved

Hide resolved

sdk/core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/ReactorSender.java

Show resolved

Hide resolved

sdk/core/azure-core-amqp/src/main/java/com/azure/core/amqp/implementation/ReactorSender.java

Outdated

Show resolved

Hide resolved

Co-authored-by: Liudmila Molkova <limolkova@microsoft.com>

…en a message in the batch is empty.

When sending a batch, the EH and SB Sender allocates a byte array upfront with a length equal to the entity's MaxMessageSize. For a Queue entity, the MaxMessageSize can range from 1MB to 100MB. It is an allocation overhead; e.g., the library allocates 50MB (if the entity's MaxMessageSize is 50MB) to send a batch of size 2KB. The current allocation behavior in T1 and T2 libraries are the same.

This PR address this overhead by taking a different approach -

allocation-pattern

Shown below is the memory allocation pattern before and after the new apporach. The Queue used has a Maximum message size of 25MB.

The code used the measurement is below:

expand

Notes on precomputing the byte[] size for encode api

expand

When encoding for Amqp 1.0 spec "amqp:data:binary" format -

The 'FastPathDataType' writes the descriptor codes as below:

After the descriptor codes, the 'BinaryType' writes the binary (byte[]) as below:

Knowing the spec we can precompute the size of byte array to pass to encode api.

Reported issue